Workshops

Poznan / Torun / Warsaw

POZNAN

Weather modeling workshop // Dr. Mateusz Taszarek // UAM Poznań // 8-9.06.2019

The workshop, conducted by Dr. Mateusz Taszarek of the Department of Meteorology and Climatology at the Adam Mickiewicz University, posed the question of the relationship between a physical phenomenon and a simulation based on mathematical modeling. The case study on which the deliberations of the workshop participants were based was a digital model that allows the simulation of tornado processes.

Due to the very specific topic, the workshop was divided into two parts – the first dealt with the physical and meteorological phenomena in the atmosphere that ultimately result in tornadoes – particularly in the US states of Texas, Oklahoma, Kansas, Nebraska, Iowa, and South Dakota (the so-called Tornado Alley). Analyzing these factors allowed for a much more in-depth understanding of the issues in the second part of the workshop, namely how to capture these phenomena in the mathematical model used to create digital simulations of the processes and forces that occur in a tornado.

During the workshop, participants considered the implications of tornado mediatization. Because the simulations drew on huge amounts of data, the resulting digital tornadoes exhibited properties very similar to those studied in the physical world. The simulations, however, were never a perfect representation of the tornado that occurred, since the factors present at any given time cannot be reproduced with sufficient accuracy. Therefore, each modeled tornado is unique and exists only within (or as) the synthetic environment in which it was generated.

The workshop adopted a definition of a model as the extraction of the relevant elements of reality and the relationships between them, together with hypotheses regarding the essence of these relationships and their possible mathematical expression as functional relationships. Such an approach provokes two questions. First, since the model requires reducing all data to those that the simulation operator considers most important, how different can the output simulations be, depending on external considerations such as the purpose of the simulation, interpretations of the data, or perturbations of the data?

The second question concerns how to view the simulation – should we treat the synthetic tornado as a model of a physically existing tornado or as an independent phenomenon? In the first case, the simulation is a tool to roughly predict its response and transformation, depending on the change of individual parameters. The tornado is transformed into an extensive data set on which numerical operations can be performed. However, if the second assumption is made, then modeling a tornado is not a process of reflecting a physical phenomenon in a digital environment, but creating a completely new environment that is subject to new rules. In that case, it seems reasonable to analyze how it will change, evolve and react to new elements. The goal of the simulation (which is to reproduce the phenomenon as accurately as possible to determine its behavior) is replaced by a synthetic environment, which can be the subject of research concerning emergent phenomena, the transformation of code and data, or the relationships between objects in the simulation.
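The first question above, about how far outputs can drift when the input data are perturbed, can be illustrated with a toy sketch. It does not use the tornado model discussed at the workshop. As an assumption made purely for illustration, it swaps in the Lorenz-63 system, a classic three-variable caricature of atmospheric convection, and runs it twice from starting points that differ by one part in a hundred million. The function names and parameter values are hypothetical choices for this sketch.

```python
import math

# Toy stand-in for a weather model: the Lorenz-63 equations.
# Assumption: this is NOT the workshop's tornado model, only an illustration.
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

def rk4_step(state, dt):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    def shifted(base, slope, factor):
        return tuple(b + factor * s for b, s in zip(base, slope))
    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )

def distance(a, b):
    """Euclidean distance between two states."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

def separation_history(perturbation=1e-8, steps=5000, dt=0.01):
    """Run two simulations whose initial data differ only by `perturbation`
    and record how far apart they are at every step."""
    run_a = (1.0, 1.0, 1.0)
    run_b = (1.0 + perturbation, 1.0, 1.0)
    history = [distance(run_a, run_b)]
    for _ in range(steps):
        run_a = rk4_step(run_a, dt)
        run_b = rk4_step(run_b, dt)
        history.append(distance(run_a, run_b))
    return history

if __name__ == "__main__":
    history = separation_history()
    print("initial separation:", history[0])
    print("largest separation:", max(history))
```

Under these assumptions the two runs begin practically identical and end up as different as two unrelated states of the system, which is one way of reading the claim that each modeled tornado is unique to the synthetic environment that generated it.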

WARSAW

Bio-construction workshop // Dr Karolina Żyniewicz // University of Warsaw // 29-30.06.2019

During the workshop led by Karolina Żyniewicz at the UW Institute of Biochemistry and Biotechnology (Synthetic Biology Laboratory), the basic issue was the boundaries (and their blurring) between biological processes occurring spontaneously and those that are intentionally provoked by humans. Participants had the opportunity to learn about the mechanisms of bacterial engineering using so-called biobricks, crafted DNA sequences that can be combined to create a new genetic code and grow the desired microorganism from it.

By actually working in the lab to create new microorganisms, workshop participants were given a special insight into the relationships between nanotechnology and molecular biology, and between engineering science and programming. This work provoked a debate on the fluid boundary between laboratories, researchers, technology and wet media, and an attempt to recognize the affective networks of human and non-human subjects.

TORUN

Social Robotics workshop // Magdalena Szmytke // Nicolaus Copernicus University // 11-12.05.2019

The workshop, led by Magdalena Szmytke at the Neurocognitive Laboratory of the UMK Interdisciplinary Center for Modern Technologies, focused on the issue of social robotics and the relationship between humans and their technosphere. The workshop offered an in-depth insight into technologies for monitoring human biometric and cognitive functions using so-called wearables and eye-tracking technologies.

An important axis of the workshop was the issue of collecting, storing and using data, creating technological dividends that, once acquired by neoliberal actors, can be used as tools of social control and subjugation. Participants searched for possible forms of social debate that would allow the safe use of such technologies, while preserving the ethical principles of the functioning of human-technology relations in capitalist reality.

ENVIRONMENT – LABORATORY – NETWORK

NOTES FROM FIRST MEDIATED ENVIRONMENTS MEETING

POZNAŃ 6-7.04.2019

#1 CYBER-AGRICULTURE AND GENETICS GROUP

Anna Adamowicz / Michał Gulik / Bogna Konior / Miłosz Markiewicz / Jakub Palm

Can contemporary objects – the network, the laboratory, the environment – still be understood through existing epistemological categories? In what ways could we exceed reigning disciplinary frameworks? Simultaneously slippery, vague, rigorous and particular, concepts and objects, seemingly objective in their existence, escape description and discipline alike. And so ‘network,’ ‘laboratory’ and ‘environment’ can be seen as exchangeable and imprecise. Everything can, in the end, be reduced to relations: networks linking environments in a planetary laboratory extend into information webs interwoven in cybernetic laboratories of artificial intelligence.

We can, admittedly, escape this semantic trap by paying attention to particularity. A spider web, a fishing net or the internet are networks that differ in organisation but equally succumb to the rules of intentional communication, even if that means that life is communicating with itself. Deregulation is intrinsic to the contemporary political economy of digital networks and social media. These ‘less hierarchical’ structures were supposed to free us from bureaucracy and power but ended up making them more elastic and pervasive. And the laboratory? It’s hard to think about it without concrete examples: Pasteur, cultivation, offices. Working in the laboratory is, again, intentional and hierarchical – this is where human and object agency meet, hypotheses are verified and knowledge produced. The environment, on the contrary, if it is not a network and not a laboratory, is random, contingent, self-generating. Complex systems or animal umwelts allow for emergence, for the incalculable production of life.

These definitions follow established rules, filling the contours of well-known frameworks. What, then, if we want to think anew, differently, creating new theoretical possibilities? Conceptual experimentation is no free-for-all; it requires new methodologies. Anne-Françoise Schmid can show us the way through her methodology of ‘integrative objects’ and ‘non-standard epistemology.’ Integrative objects are such objects that do not yet exist, for example, a genetically modified skyscraper. But they are also the objects that demand interdisciplinary work or cannot be sufficiently studied by one discipline exclusively. Instead of thinking through an object by adding one discipline to another (for example, biology, architecture, arts and urban studies), we define our objects as an “x” without precedent, whose parameters are as of yet unknown. “We are unable,” she writes, “to understand certain contemporary objects without excluding something from them, without somehow mutilating the object that we wished to understand.” How can we open objects up to multiple disciplines, unfix them from reigning definitions and designs? These objects, such as climate change or artificial intelligence, “are, at once, acts of aggression and choreographed performances and technological simulations and training exercises.” One of the methods we can use to open them up is suspending their defining trait – something that we currently cannot imagine these objects without. This is where we begin.

Network-without-relations

The network has established itself as a prevalent spatial metaphor delineating the media ecologies of the present. Its radical conceptual roots in the immanent rhizomatic framework of Deleuzian ontology have long been co-opted by neoliberal modes of exploitation. The internet makes this hijacking most visible: the non-hierarchical peer-to-peer architecture of dispersed communities has been reshaped into a centralized client-server topology (Kleiner). Far from being merely a technical term, network(s) can also describe the condition of contemporary precarious knowledge-work. Networking provides the necessary means of existence, at the same time foreclosing the accomplishments of labour rights movements, such as the once-established separation between work and leisure, and workload limits. Today, according to Moulier-Boutang, “the network comes to the fore as a form of cognitive division of labour.”

Following Anne-Françoise Schmid’s idea of the integrative object, we established ‘relations’ as the defining feature of networks, and hoped to open up the concept by imagining what a network without relations could be. Experimenting inside this semantic field, we were hoping to move beyond the paradigm of vitalist, relational ontology, to address the often-abandoned notions of autonomy, alienation, and autarchy. Imagining a network without relations, we were seeking to envisage connections that do not immediately form a hierarchical relation of co-dependence. Networks of unrelated actors that nonetheless establish some level of togetherness could potentially reinscribe political urgency into the otherwise all-encompassing, claustrophobic idea of the network without any Outside. By reclaiming autonomy and alienation as positive terms, we sought to imagine potential emancipatory politics heralded by new technologies of deep learning and artificial intelligence. The concept of recursion rather than relation served as a vehicle for thinking alternative modes of production, freed from the networking mechanisms of recapture.

Laboratory-without-tools

The laboratory is a space where hypotheses are verified by falsification in order for knowledge to be produced. A distinctive feature of the laboratory is the presence of tools and the possibility of using them. It is thanks to them that the falsification processes can be carried out. Regardless of the tools, laboratory conditions are artificially assembled rather than “natural” – they have little to do with reflecting reality as it exists outside of the laboratory. Out-of-the-laboratory conditions are instead suspended in the laboratory space.

Laboratory tools introduce new stimuli into the workflow. Laboratory tests are therefore speculative (hence the need for the process of falsification). The tools (in themselves) also have an impact on test results. So if we remove the tools from our laboratory space, we are left in a space where agency works differently. Perhaps, without the intervention of tools, this could be a “pure agency,” unmediated and not dependent on the alterations of measurement? In this case, however, action would be suspended – it would be closer to pure potentiality. The hypotheses that could be found in such a laboratory, even if true, could not be verified. If, however, we retain the principle of laboratory production of knowledge – without falsification – then our knowledge will turn out to be a fiction. At one extreme, this might render it close to conspiracy theories or denialist theories. On the other, as Schmid writes, a generic epistemology requires fiction, which stands not for “the unreal” but “the unknown, the heterogeneous and the future.” “Fiction,” she writes, “is a method that allows us not to reduce an object or a discipline to the state of a fact, to its known objects, but to extend it to other series of knowledge.”

The laboratory-without-tools transforms the production of knowledge into the production of causative fiction and of modelling. It is a space where hypotheses meet and, due to the suspension of external disciplinary rules, may be subject to various intra-actions, including those with the laboratory itself. This fiction could be so strongly causative as to jumpstart the production of further hypotheses, statements and narratives, opening up the laboratory space to yet unforeseen mechanisms. It has the character of fundamental knowledge even though ontologically it turns out to be a simulacrum.

Environment-without-space

One could assume that in the triad of ‘environment’, ‘laboratory’, and ‘network’, the last one is a meta notion, a term descriptive of the intertwinement of the first two. The relations between the designata of ‘environment’ and ‘laboratory’ are multitudinous—in each and every environment a particular subset, i.e. a laboratory, can be delimited; an environment can be comprised of many laboratories.

An environment is directly determined by physical laws and so are its objects and relations. All events that occur in a given environment are causal and thus a form of recursivity characterises the set itself: the environmental state is volatile because its objects acquire new properties by interacting with each other. As part of any environment, laboratories, though more controlled, are by definition subject to the same causality. They examine specific environmental phenomena and export environmental artefacts. However, laboratories are also affected by social conditions: competition, inspiration, policy, funding limitations, and so on; indeterminists can see these relations as independent of the aforementioned laws, yet it is safer to assume that some dependency does take place. Those who are socially prone, the laboratory agents, are part of the environment themselves; they should be recognised as at least indirectly determined.

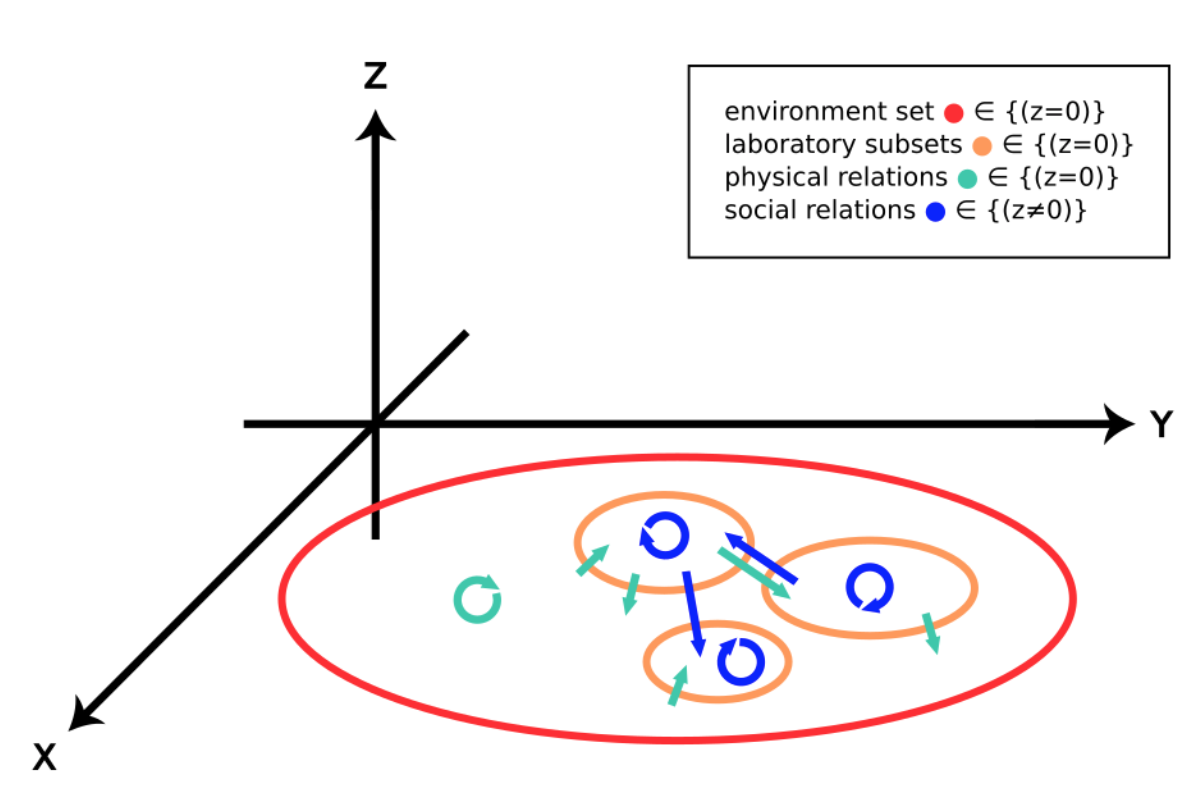

For illustrative purposes, the three-dimensional Cartesian coordinate system presents the environment set plotted at XY-plane (z=0)—the only coordinate plane that is directly determined by physical laws; the indirectly affected one, namely social relations, is z≠0.

——–

Moulier-Boutang, Yann. Cognitive capitalism. Polity, 2011.

Kleiner, Dmytri. The telekommunist manifesto. Amsterdam: Institute of Network Cultures, 2010.

Schmid, Anne-Françoise, and Armand Hatchuel. “On generic epistemology.” Angelaki 19.2 (2014): 131-144.

Schmid, Anne-Françoise. “On contemporary objects.” Simulation, Exercise, Operations, ed. Robin Mackay. Urbanomic, 2015: 63-68.

#2 DEEP SEA MINING AND ANTHROPOCENE GROUP

Aleksandra Brylska / Franciszek Chwałczyk / Maciej Kwietnicki / Maksymilian Sawicki / Aleksandra Skowrońska

We approached the laboratory, the network and the environment as certain ontoepistemologies (Barad 2007). On the one hand, laboratory, network and environment are tools for cognition, and on the other, tools for shaping, or even determining, the essence and form of what is known. Those two sides are inseparable from each other. The question of how these different perspectives are linked to each other, and how they can be seen and shown, was one of our main interests.

Laboratory

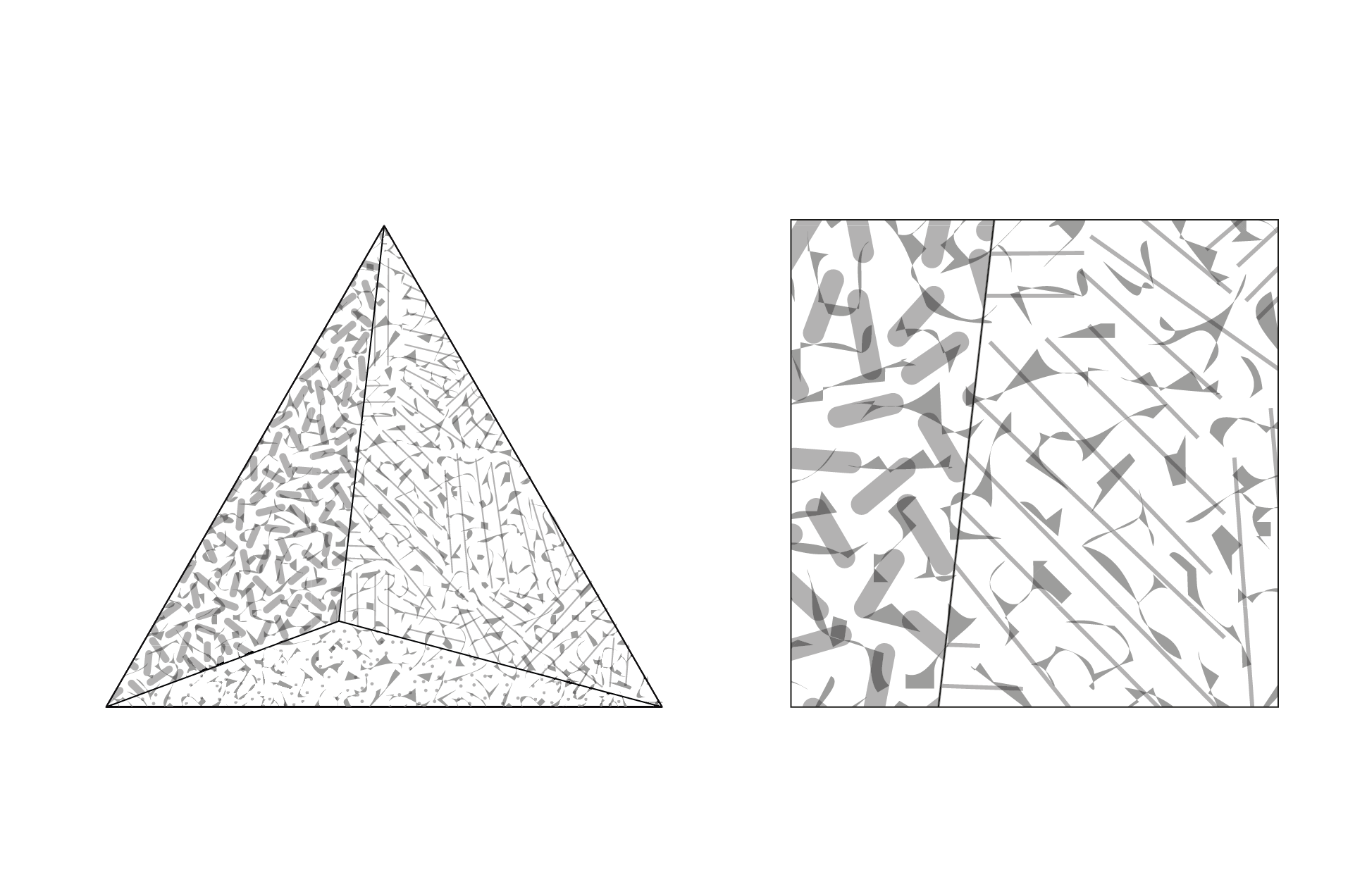

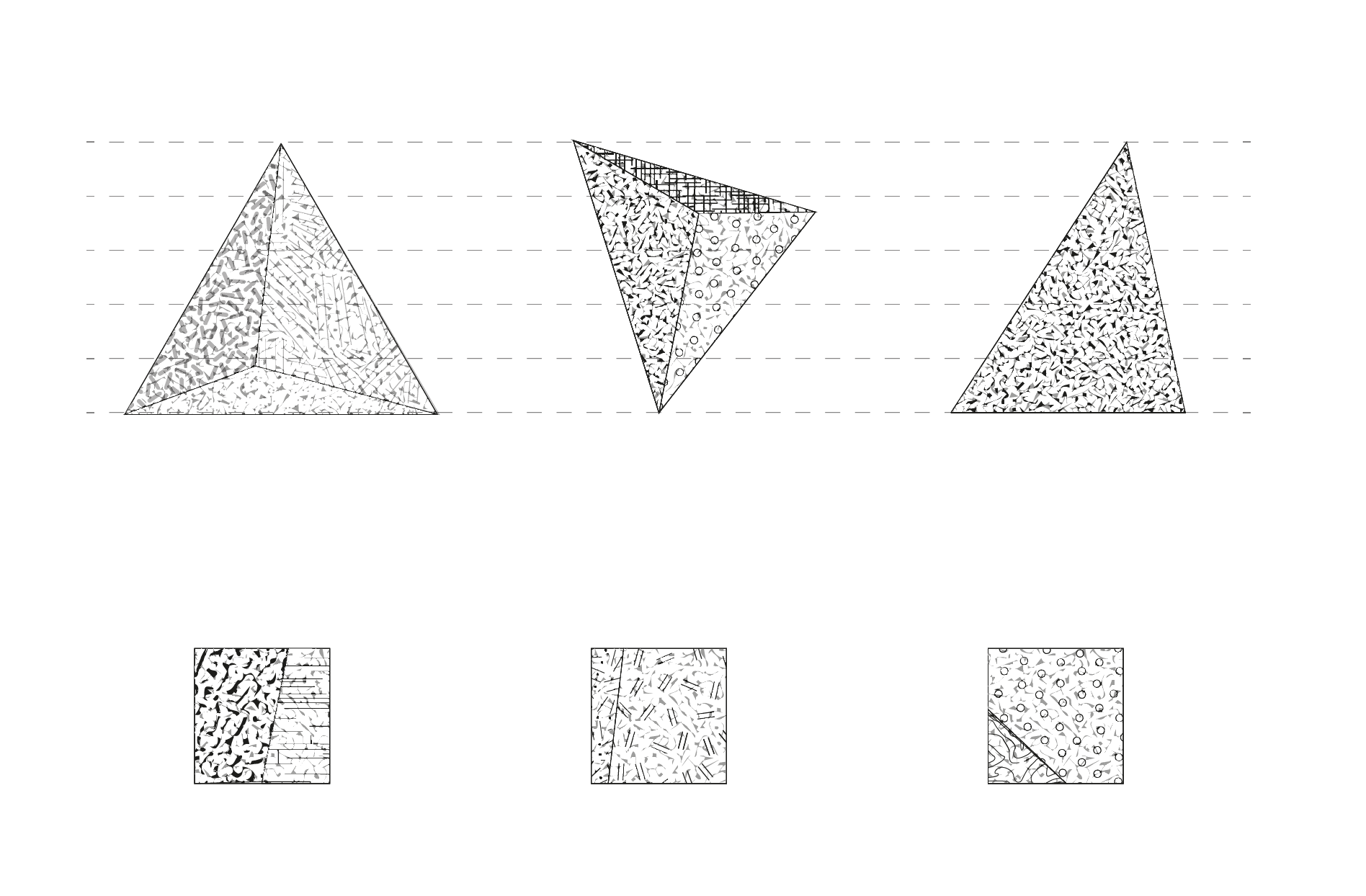

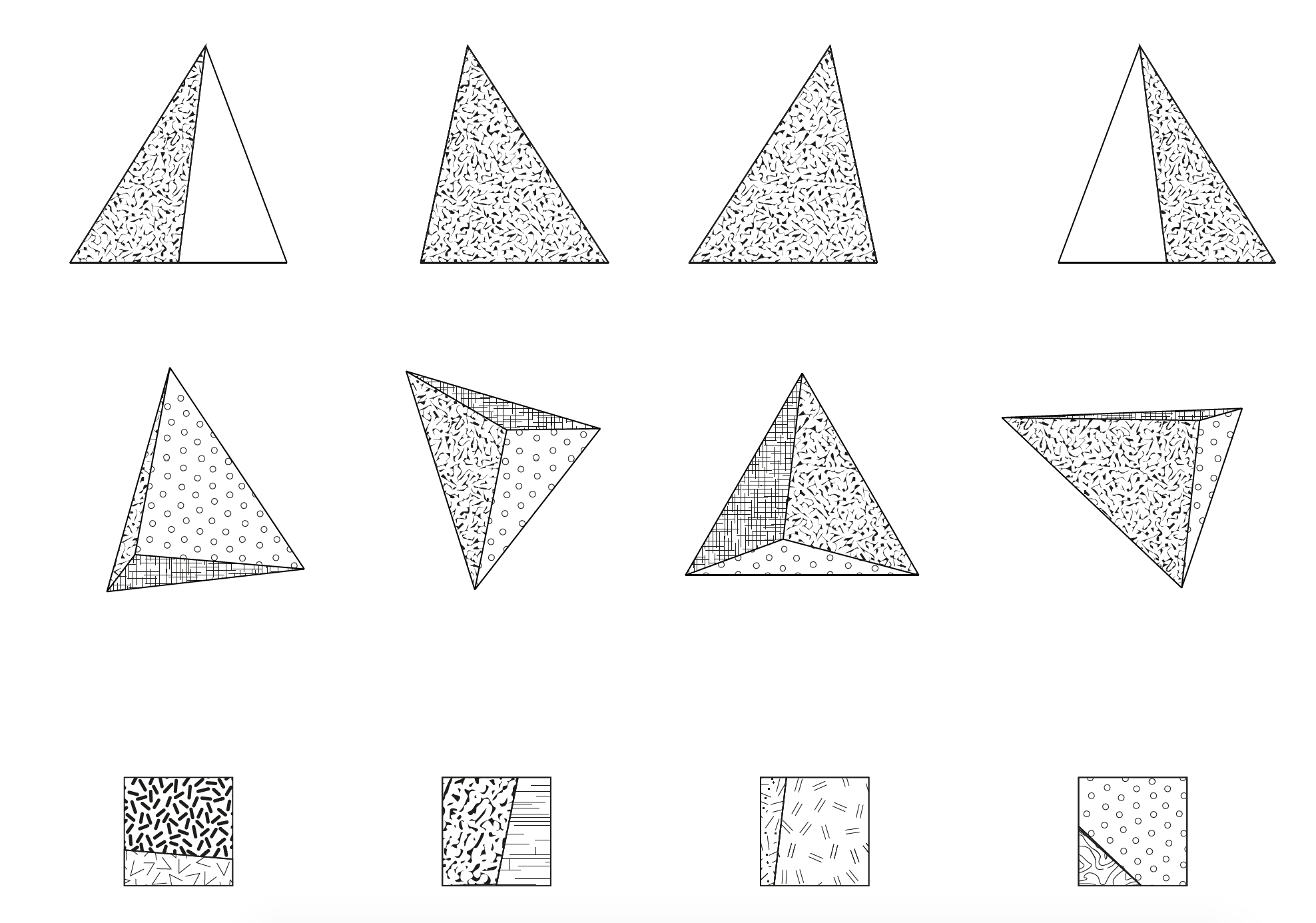

The laboratory appears to be a network of environments and an environment of networks. However, working in a laboratory means working with scale: it is the process of cutting out a piece of a given reality, transferring it into a recreated environment, manipulating it and then transferring it back to the changed environment. Laboratory activities seem to “flatten” the studied phenomena into samples, scaled equivalents, or concepts, depending on the subject of research. On the basis of “flattened” research objects, they try to confirm or deny a given hypothesis or to track the relationships between objects and their mutual influence. Hence the “inscription” we drew in a laboratory style: one can see there idealised entities, clearly delineated environments, connecting lines of the network, and symbolic marks of meaning (not forgetting tools and method).

One can see here how, on the one hand, “laboratorization” consists of constructing and discovering connections between different environments and networking them. On the other hand, it is based on the construction of (hyper-)environments for these networks – from recreation of the natural environment in the apparatus for the sample (appropriate temperature, sterility…), through the biological environment of the bodies of scientists (so that the knowledge-creative processes can take place in them) and the symbolic environment (in the form of mind content, external carriers and interfaces) to the socio-political-economic environment (funds, stability of professions and employment, political stability, social consent) for the functioning of the laboratory.

This shows that the laboratory is much more than a sterile system borrowing a piece of “external” material. Ultimately, the whole reality can be considered as a laboratory – on the scale of the universe it is a simulation or a big collider, on the galactic scale – Newtonian spheres, on the scale of the planet it is the laboratory of evolution (testing species in contact with environments).

Network

From the ideas of the Internet and its woven cyberspace, through theories of graphs and neural networks and theories of networks as noise, to Actor-Network Theory: today, the network as a way of thinking and a model of reality is everywhere. However, this network, as in any of the examples above, is quite specific. It usually serves as a very idealized representation: a vision of clusters of larger or smaller points, connected by thicker or thinner straight lines (representations of connections), set in an unspecified space. During our discussion and work on this concept, we felt it was necessary to visualize the network in order to make it material and visible (to develop the visible “roll” in which we “found it”), both in McLuhan’s way (making the network a subject in itself, not what is being used to illustrate or transmit it) and in Kittler’s way (asking about the network’s matter, its hardware). In this way we could see these entanglements and think about crossing them.

Environment

The environmental discussion started with an attempt to define our understanding of the term. The discussion about the concept of Nature and its cultural conditions turned us towards two paths. One of them would have required us to abandon Nature in favour of the concept of the environment, which was also problematic for us. The second path led us to reflect on the politics of the concepts of laboratory-network-environment, and then to try to overcome the difficulties involved. We proposed replacing the concept of Nature with Natur, reversing the power relationship and disenchanting the sexual implications that followed the earlier form of the concept.

Here, our intuitions moved towards relational thinking, in which relationships are primordial and constitute objects, rather than primordial objects entering into relationships. This led us to think more in terms of fields, planes, tensions and vectors, of interpenetration and overlapping, and thus of release (not through borders, but through a tendency or attraction in one direction or the other, with the possibility of a point of equilibrium between the two, and thus perhaps in something third) and the creation of something new.

Here we turned to the emergent phenomenon of colour. Its existence is debatable in itself, but it is made possible by specific environments: physical (light and its reflection); biological (the human nervous system from eye to brain, and evolutionary adaptations resulting from interaction with these other environments); and cultural (the ability to distinguish between colours and to give them meaning). It also makes it possible to blur the border or even make it disappear: where two environments meet and overlap, a third one is created, which is difficult to separate from either and which itself becomes a border.

At the same time, we have managed to present the relationship between laboratories and environments (from the point of view of the latter). You can see here how a laboratory essentially consists of borrowing conditions from one environment and mediating them in another – it is itself a hybrid environment.

Figure

Each of the above-mentioned ontoepistemologies may serve to grasp the remaining ones or some fragment of reality, not only showing something that the others cannot, but also (co-)constituting an object. According to onto-epistemological thinking, it is impossible to distinguish here between the tools of research and its subject. By connecting to a given network (in order to gain access to it at all), and every time we probe it, we change its structure, weights and nodes through our interactions. When we enter a given environment, it becomes our environment and we become part of it: we locate ourselves in this field and disrupt its system (and it disrupts us). And by “laboratorizing” something (cutting out a piece of a given reality, moving it into a recreated environment, manipulating it and then moving it back to the changed one) we create a conjugated system that cannot be separated into apparatus and investigated reality (as Karen Barad shows using the example of physics).

Therefore, in order to continue our deliberations, we proposed a three-dimensional geometric representation in which each wall represents one of the above-mentioned ontoepistemologies. In this way you can take the figure in your hand and look through one of the walls at a given object (or create it by this look). At the same time, the other two walls, connected with the one you are looking through, are seen further down the line. It is impossible to look through more than one wall at a time, although it is possible to switch between them. The figure is a trihedron.

Meanwhile, inside this figure we have everything that is not “pure” enough and not subordinated to a given ontoepistemology: periphery, noise, otherness, disorder, “garbage”; everything that is not included in it (or in any of the three), or is not visible or noticeable at all. However, the figure cannot exist without this middle: noises and peripheries are necessary and constitutive for each of the planes and the connections between them. At the same time, this medium and its content is where the potential lies and where something new can be created, thanks to vagueness, hiding in the shadows, coincidence and openness. What we are interested in is the interior of the trihedron and the paths that pass through it, connecting its different walls: descending under their surface and emerging on the other side.

Foucault’s Trihedron

In an attempt to find a place for the humanities in science, Michel Foucault proposed a similar figure: the trihedron of knowledge. He started from the observation that not everything in science can (or should) be mathematized, and that knowledge does not extend along one dimension only, the axis running from formal purity to empiricism. One wall is made up of the mathematical and physical sciences (in our case it could be the wall of the network); the second of the sciences of language, life, and the production and distribution of wealth, “which order discontinuous elements, but analogous ones, so that they can establish causal relations between them and structural invariables” (Foucault, 2006, p. 311/2005, p. 378), which could be the wall of the laboratory in our case; and the third of philosophical reflection (this could be the wall of the environment in our case). Where the walls meet, the corresponding interactions take place.

According to Foucault, the humanities are excluded from these levels. However, they nest in the cracks and stretch between them, filling the interior of the triad formed by these plateaus. They move towards and reflect on the desire for formalization (networks), refer to biology, linguistics and economics (laboratory), and try to connect with philosophy (environment). These are unstable sciences, with no permanent or specific place in the structure of knowledge. The author points out that this flexibility makes their status as sciences precarious. At the same time, they pose a threat to the other sciences: the threat of usurping, replacing or relieving them, or of claiming universality.

We, as representatives of these sciences (and some of their neighbors), interested in these levels and in what circulates under their surface, try to dive into the interior of this trihedron and to surface not necessarily in the same place, moving between these three sciences/three ontoepistemologies and trying to develop meanings and theoretical tools.

———

Barad, K. (2007). Meeting the universe halfway: Quantum physics and the entanglement of matter and meaning. Durham & London: Duke University Press.

Foucault, M. (2006). Słowa i rzeczy. Archeologia nauk humanistycznych, Gdańsk: Słowo Obraz Terytoria. / Foucault, M. (2005). The Order of Things. An archaeology of the human sciences, Routledge: London and New York.

#3 PALM OIL AND NETWORK DISTRIBUTION

Jakub Alejski / Aleksandra Borys / Przemysław Degórski / Anna Paprzycka / Mikołaj Smykowski

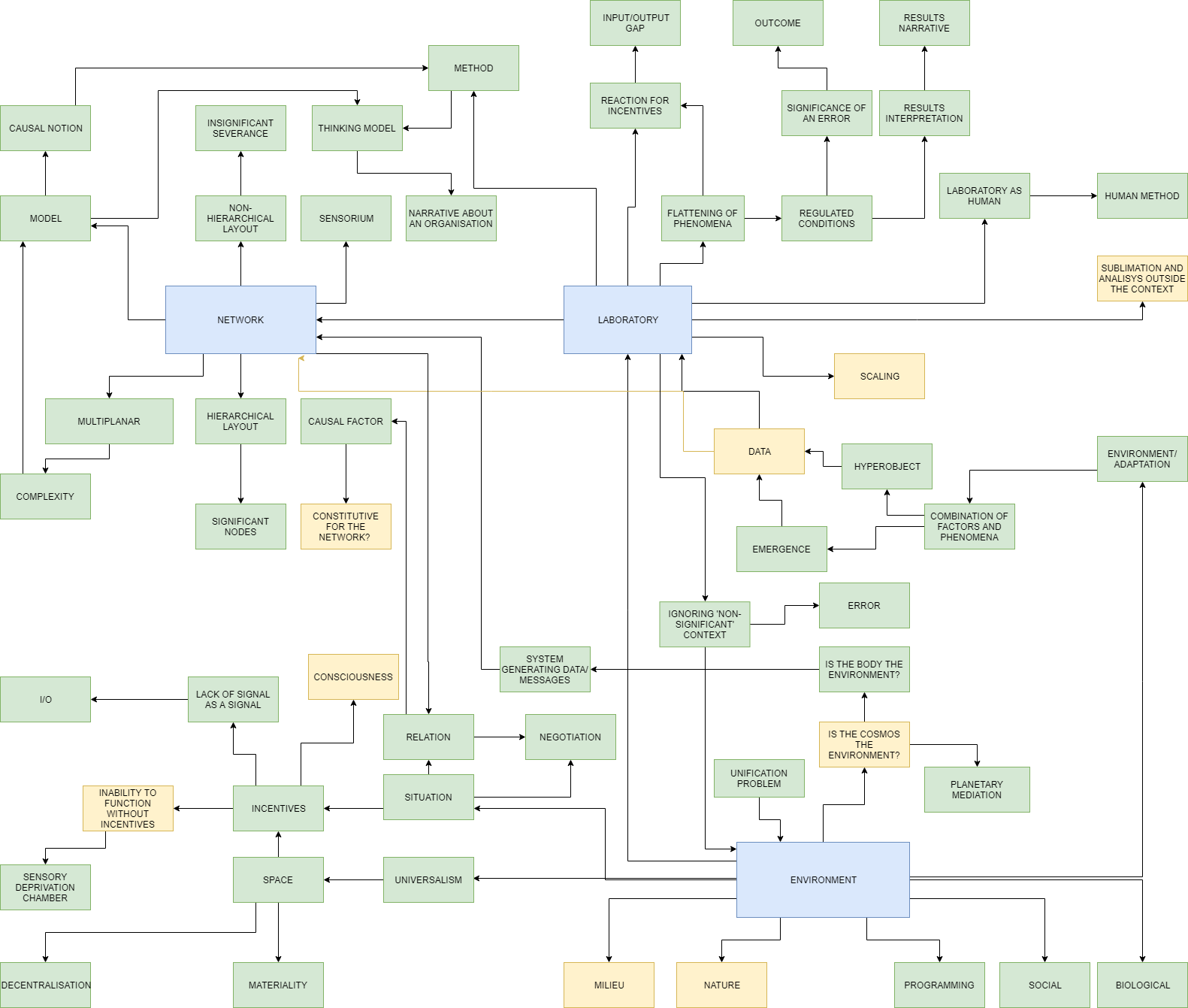

The graph was created to map the development of a collective brainstorm, allowing a very intuitive process of selecting and organizing the dynamic development of thought during our discussion to flourish. The raw draft is a recollection of our non-linear discussion and presents the complexity of our attempt to articulate our ideas.

Taking ENVIRONMENT as a starting point and key concept, we faced the problem of agreeing on whether it needs to be defined from a human’s perspective or from that of some other object. Could an empty void be an environment as well? Taking this idea to the micro and macro scale, we discussed the differences in our relation to the outer and inner bodily experience of the human habitat.

In search of environmental boundaries and of ideas of infinity with boundaries, we referred to radical and ambiguous cases, such as a vacuum as an example of absolute emptiness, a medium without living entities. But what about inanimate, inorganic objects and stimuli such as light or electromagnetic waves? Would a human body in a technologically mediated artificial environment such as a space suit belong to the external, larger-scale environment? Could we theoretically disconnect it from the surrounding vastness of the cosmos? Can it be that, by looking for the boundaries, we are facing the concept of an interconnection of beings that creates the environment?

Expanding on questions concerning the relations between the environment and environmental stimuli, we investigated the case of a sensory deprivation tank, in which a “state of suspension” is achieved by cutting off all external stimuli. Such an experiment requires designing an “unnatural” environment with specified properties such as density and temperature. In this case we examined whether there is a distinction between an “unnatural” and a “natural” environment. Anything created by humans is the effect of “natural” resources combined in a particular way that we tend to call “unnatural”.

Going deeper on our journey down, down, down the hole of articulation, we began wondering whether the presence of physical matter is essential for the environment to exist, and thus whether it can be defined by the data, information and relations that determine its functionality. Is it possible for a living entity not to perceive and experience its environment? What about its “dark side”, inaccessible to human cognition (e.g., Timothy Morton’s hyperobject or quantum phenomena that human perception usually cannot reach)?

The concept of LABORATORY was the next marked point on the graph and in our discussion. Following on from the ideas of a created, artificial, sterile environment, it felt like an organic bridge to the next topic. The laboratory appeared to be a kind of mathematical projection that is not able to convey the entire problem of reality, but only a simplified picture. We debated the relations of prediction and failure as the most interesting and creative aspects of this situation. If the laboratory is understood as a fracture in an “input / output” layout, a filtration of reality takes place that is aimed at proving the non-existence of errors. Error (noise) and information incompatibility are usually perceived as side effects and marginalized. And the mere fact that the process is forced into an artificially framed reality will result in the loss of valuable information that could be accentuated beyond laboratory conditions. Of course, as with the environment, the problems of scale, process management, and the narrative nature of laboratory experiments emerge here too. An interesting aspect of the laboratory was the idea of control over it, including control over possible error, which assumes a certain hierarchy of results, processes and data.

NETWORK was the third aspect of our debate; at the same time, the grid proved to be a satisfying visual representation of it. The network, following the trail of cybernetics, assumes a decentralized structure in which the accent falls not only on the constituent elements but also on the relationships between them. The network can act as a reliable information system that does not have only a unidirectional arrowhead. It can be a model for describing the complexity of biological, atmospheric or tectonic processes, including the related Deleuzian rhizomes and human and other-than-human actants.

However, as one penetrates deeper and deeper into the network, the huge number of factors may make it impossible to perceive them all at the same time. One can therefore be condemned to filtering individual data (the network then takes the form of a sieve, a fishing net). In choosing the network model, the autonomy of its individual elements may easily be forgotten, as Jane Bennett reminds us in Vibrant Matter.

It became a puzzle whether, in the network model, the relationship itself can be regarded as an efficient actant. Is it possible to talk about a relationship without objects that interact through it? Or is the creation of a network just an excuse to exchange information with external objects?

Here, too, we challenged the concept of the network, examining it from a sensorial perspective and questioning the human body, where data does not have to pass through the network structure of the body but can take place outside of it, engaging its external point.

The interdependence and interrelations between network, laboratory and environment became clear. The debate returned to emphasize the diffusion of all three concepts. The more we focused on the essence of those ideas, the more commonality we saw in them.

———

Bennett, J. (2010). Vibrant Matter. A Political Ecology of Things. Durham & London: Duke University Press.

Morton, T. (2013). Hyperobjects. Philosophy and Ecology after the End of the World. Minneapolis & London: University of Minnesota Press.